Thoughts on Interviewing

I think I'm destined to always be an iconoclast when it comes to interviews. Not quite so much as in the days when "let's ask programmers some questions about programming" was a controversial idea, but I still find that when I introduce people to the way I do things they're sceptical until they see the results.

There's probably not much point in me going over "how to run an interview", as a cursory glance at my LinkedIn feed suggests you'd have a hard time not stumbling over such an article. So instead I'm going to talk about what I do that's different, and why I do it. Then I'm going to upset everyone with a viewpoint that's way "out there" in current hiring contexts.

Two Dimensions of Candidate Skill

Being good at interviews is not the same as being good at the job. A trivial example is the developer who writes beautiful, well-structured and fully tested code in a pairing exercise, but in the real world is unable to work with anything that falls short of such perfection.

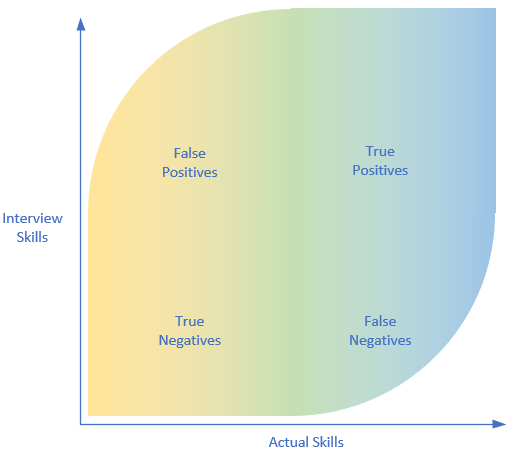

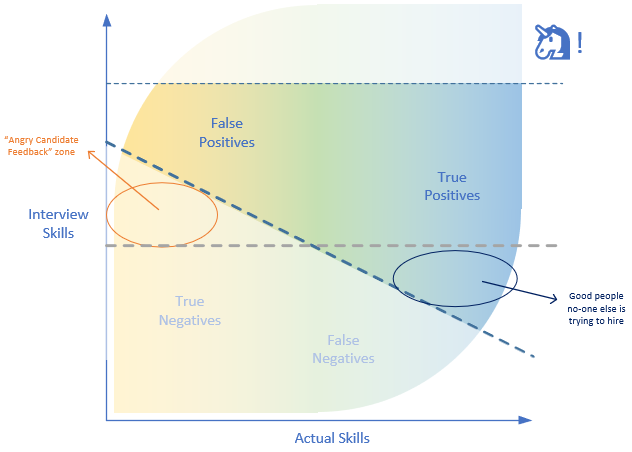

There are some limits to this - assuming a reasonable process, you wouldn't expect someone totally hopeless to turn in a flawless performance. You need some genuine skill to ace a difficult interview. Similarly, anyone with sufficient genuine skill is unlikely to totally fail a process. The consequence is you end up with something looking like this:

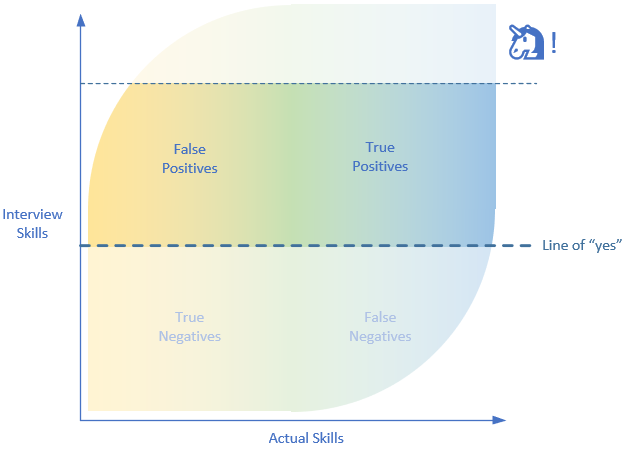

Now let's add some reality. You probably have a line below which you won't make an offer. But also chances are you aren't Netflix or Google; there are people with a higher budget or a more compelling offer than yours, and they're getting access to candidates you don't have access to. With those lines drawn, the picture looks like this:

This is pretty normal for an organisation with a standard setup of a phone screen, a technical interview including both questions and some form of coding exercise, and a face to face that's a bit more soft-skill and behavioural. In this situation, you're annoyed by the number of candidates you reject, the number you lose to better offers, and the number who get through and turn out to be unable to perform the job.

Common Approaches That Don't Work

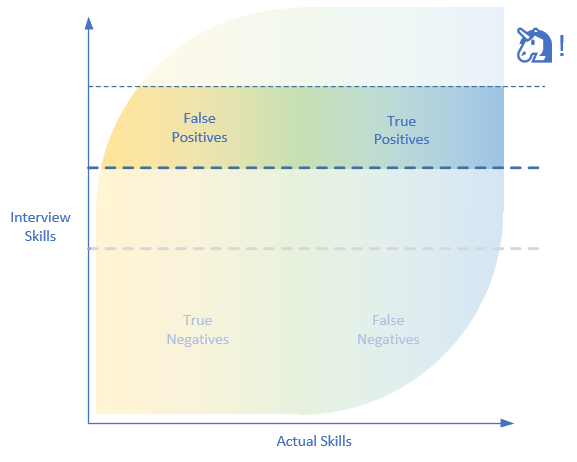

The first response to this is to go, "if people who can't do the job are getting through our process, we need to make the passing grade higher! After all, Google/Apple/etc. interviews have a reputation of being really tough, and look how great their employees are."

What does that look like on the quadrant?

Something to note: the ratio of false positives did go down. This is because you put your passing grade all the way up into the level where you need some genuine skill to achieve it. But... you are not Amazon. Your offer and hiring budget are not compelling enough to get into that sweet spot where you have to be so good to get through the interview process that anyone passing it has some level of basic competence, even if they're on the unfortunate side of the quadrant. And the closer you put your passing grade to that unicorn line, the smaller your candidate pool is going to be.

This is how you end up being the organisation which spends vast reserves of time and energy in trying to recruit people, without ever actually hiring anyone. (Most likely in-house, because if you do this long enough then sensible agencies will give up talking to you.)
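If you'd rather poke at that trade-off than take my word for it, here's a rough toy model of the quadrant. Everything in it is an assumption made up for illustration: the 0-10 scores, the width of the diagonal band, the competence threshold, the unicorn line, and the idea that candidates are spread evenly across the band. None of it is real hiring data, and the names (`Candidate`, `candidate_grid`, `outcome`) are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Candidate:
    job_skill: int        # how good they are at the actual job, 0-10
    interview_skill: int  # how well they perform in interviews, 0-10


# All thresholds below are invented numbers, purely for illustration.
BAND = 3          # assumed maximum gap between interview skill and job skill
COMPETENT = 5     # assumed job skill needed to actually perform the role
UNICORN_LINE = 9  # assumed interview score at which better offers win


def candidate_grid():
    """One candidate per (job, interview) pair inside the diagonal band."""
    return [
        Candidate(job, interview)
        for job in range(11)
        for interview in range(11)
        if abs(job - interview) <= BAND
    ]


def outcome(candidates, passing_grade):
    """Count hires, false positives and false negatives for a passing grade."""
    # You can only hire people who pass your bar and aren't lost to a
    # more compelling offer above the unicorn line.
    hired = [c for c in candidates
             if passing_grade <= c.interview_skill < UNICORN_LINE]
    # False positives: hired, but can't do the job.
    false_positives = [c for c in hired if c.job_skill < COMPETENT]
    # False negatives: rejected, but could have done the job.
    false_negatives = [c for c in candidates
                       if c.interview_skill < passing_grade
                       and c.job_skill >= COMPETENT]
    return {
        "pool": len(hired),
        "false_positives": len(false_positives),
        "false_negatives": len(false_negatives),
    }


if __name__ == "__main__":
    grid = candidate_grid()
    for grade in (4, 7):
        print(grade, outcome(grid, grade))
```

With these made-up numbers, moving the passing grade from 4 to 7 cuts false positives from 10 in a pool of 34 to 1 in a pool of 13, while the good people you reject go from 3 to 15. Different assumptions will give different numbers, but the shape of the problem is the same: the ratio improves and the pool collapses.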

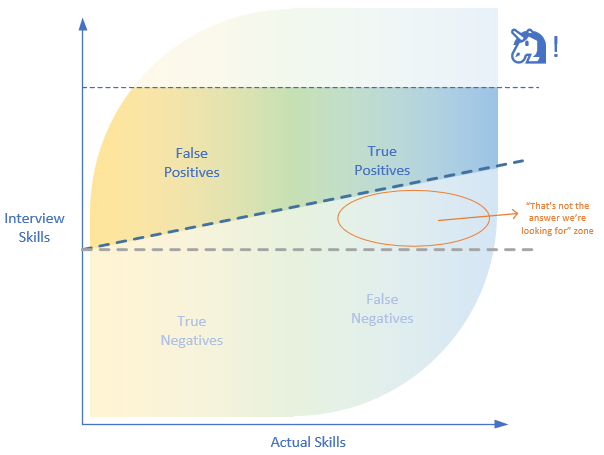

The next approach organisations commonly take is not to make the interview harder, but to formalise it. This is driven by the sensible realisation that false positives often weren't asked a particular question which would have highlighted the problem, or gave an answer which sounded good but shouldn't have been accepted.

This is what the quadrant looks like if you do that:

Wait, what? That diagonal looks utterly bizarre, but it makes sense if you think about it in terms of the quadrant. Formalising your interview process does not penalise people who are good at interviews - if they're able to give you the model answer, then having a document which says "this is the model answer" doesn't change their outcome.

But skilled people - ah. Skilled people will over-answer, give unexpected answers, or come out with something completely bizarre. Let's say you ask, "how would you scale this problem to a million users?" and your model answer is, "use a clustered database". What if they tell you the workload is read-heavy and it can be cached at the edge? What if they tell you they've got a lot of experience with that particular problem, and within the parameters you've set a million users is comfortably achievable on a single instance, and you only need to worry about redundancy and not performance? What if they contributed to such a database, pull up GitHub and spend half an hour talking about how they deal with all of the partitioning and consistency concerns, and you don't get time to ask all the other questions on your sheet?

(This blog on failing a Director of Engineering interview is an entertaining read on how this can go wrong in the real world, if you have the time.)

So these ideas fail. What does work?

Don't Interview for Interview Skills

This is where I get to the long-awaited iconoclasm. I very rarely give people long lists of technical questions, or ask them about SOLID, or any of that. I only really care about two things when I'm interviewing a candidate:

- Can they work with us, in our working environment?

- Is our organisational journey compatible with their personal journey?

That second point sounds like some kind of bollocks from an inspirational manual, so I'll leave it alone for now and talk about the first bit.

I once made a strong recommendation on someone who not only failed to complete a pair programming exercise, but didn't even write a single line of code. Why? Because we'd made an error and given them a broken laptop to do the exercise on. The recommendation came because their immediate reaction was to try to fix the laptop. Instead of getting annoyed with us, they realised it was a genuine mistake and worked with me and the co-interviewer to get a development environment set up and installed, in exactly the way I'd want to see if someone came in to work on Wednesday morning and found their OS installation had been borked by an overnight patch.

And yes, that turned out to be a true positive, not a false one!

So what does this look like on the quadrant, when you bring someone in and get as close as you can to actual work in an interview environment?

The first surprise is how much angry feedback it generates. It's not unusual for me to end up having to placate a recruitment agent by telling them that while their candidate might interview well elsewhere, our experience was that they threw a meltdown-level tantrum when we asked them to help debug a coding problem as part of their phone screen. This is not a sign you're getting it wrong; it's a sign you're starting to weed out people who have interview skills that aren't backed up by real performance.

The second surprise is the implication of that lower-right triangle: quite how many good (and in some cases very good) people aren't getting hired for stupid reasons. Sadly, some of the most common are language and cultural barriers. As an example, I know it's one of those HR sacred cows, but rejecting people for being bluntly honest about why they're leaving their current company is a big institutional bias against candidates from Germanic, Northern European and East European countries.

Designing Representative Exercises

Designing a truly representative interview exercise is hard. It's rare for an organisation to be able to bring people into a team and have them work on some real code - chances are there's too much context to learn, and if you're in any kind of secure environment your assessor is going to distribute their toys far and wide from the pram.

So the key is to have a simple problem which is understandable, and then try to get the process of solving it as close to your actual process as possible in an interview context. Mostly this is about changing your own behaviour as interviewers. You have to learn to act as you would with a co-worker. A good example is a candidate who doesn't write any tests during a programming exercise. The bad outcome is you say nothing, then fail them with a sneered, "ooh, you didn't write any tests, what an awful developer". A less-obvious bad outcome is you do mention it, then mark them down for needing prompting. Both are bad for the same reason: if you did either of these things for real in your day-to-day work, your team would be pretty broken.

So what do you evaluate them on? Well, what would you want in your real environment? For me, it would be how they react to the suggestion we should write some tests now. If they go, "oh yes, thanks for reminding me" and switch to TDD mode, that's cool - that's what I see happen in practice. If they have a good reason for not writing the tests right now, also fine. Maybe they find it faster to iterate, stabilise and then codify in test. The "no" here for me would be someone who completely ignores the suggestion, or embarks on a rant about how tests are an awful idea as all their code is perfectly correct first time.

For this reason, I'd caution against trying to make your exercise "realistic" with too much ambiguity, trick requirements or broken code unless you're actually going to call them out and ask the candidate how they'll approach this. My experience inheriting a few of these was that they ended up being randomisation factors - some people spotted them, some people didn't, and it had no correlation with how good or bad they were once at a desk with a new contract.

Some good exercises to start with (you can combine more than one):

- Write a console application to process this file.

- This solution has some failing tests, fix them.

- Extend this small application to do x.

- Design an architecture/infrastructure for this.

- Help us debug this problem.

Finally, be clear about how you're assessing the exercise. "What good looks like" wouldn't be something you keep a secret from new team members, so if you want a candidate to write tests, consider memory usage and handle common file system errors, then tell them up-front that's what you want them to do. I also make sure everyone knows whether they're expected to complete the exercise or not.

That Thing About the Journey

This is the "soft" part. It's been a while since I said something objectionable and I want to keep the momentum going as I work up to the big one, so here goes: I don't see much value in behavioural/situational questions. If people have the right values and mindset, they'll usually respond in an appropriate way the first time they encounter a situation, even if that's merely asking for help. Conversely, if they have the wrong values then they're likely to respond in the wrong way, no matter how good the answer they gave you about how they handled a conflict.

Which values are the right ones very much depends on the organisational journey. If my company is stable and highly structured, I don't want people who are freewheeling, disorganised and liable to question everything. But if I'm in the midst of transformation-induced flux and my market changes every day? Hell yes!

I use the word "journey" here as it's somewhat distinct from "values". For me, values are a point in time, whereas the journey also represents the change in those values. Take a large command-and-control enterprise company that is trying to become a decentralised, product-driven organisation. Its values are very much hierarchy, stability, process and conformity. But its journey is breaking those apart and replacing them. Similarly, it might hire someone from a startup background whose journey is learning to engage with people who don't consider a push scooter a viable means of transport.

How do you find out? If you have the right conversations, it's hard not to find out. A few postings ago I remember horrifying my then-boss by telling someone who'd been talking about their love of agile environments in an interview, "we're not really doing agile - those Kanban boards are from a failed experiment and we don't update them. We have a lot of process, and most of the daily conversations around software are budget-related. I don't think there's any great appetite to change that on the floor, other than a few isolated teams." What happened is the candidate perked up and started talking about how we could change things, what they could do, and whether the position we were hiring for was in one of those isolated teams. It was really obvious that we had compatible journeys - the organisation wanted to change, and the candidate was keen to use their agile knowledge for something more than keeping things ticking along.

A different example which ended in no offer was a candidate I interviewed who seemed to have all the right values, but something about the questions they asked nagged at me. So I asked, "how would you respond if we didn't do that?" The answer I got was a proper horror - including the immortal phrase, "you're not offering enough that you can decide not to accede to my requests, I know how good I am." The resulting conversation made us realise their journey didn't match ours at all - for a positive outcome, they needed to go to an organisation where they weren't the best developer in the team, and we couldn't provide that at the time.

It's hard to codify this kind of skill into a suitably short block of text, but really it's talking about the challenges you have as an organisation and the realities of your environment and seeing how people respond to it. I hesitate to add this as it's a little non-inclusive if you approach it the wrong way, but a few good managers I've worked with had this down as, "someone I'd talk about this over a pint with."

Any Questions?

Here's the bit to disagree with.

There's a recent trend in interviewing to make the dusty old, "do you have any questions for us?" section strongly evaluative. Please don't. If you're one of the thankfully few organisations that do this and pretend to candidates they're not marked on the questions they ask, then you may be better served by erecting a sign saying Run Away Very Fast outside your interview room.

Here are the candidates who will perform well at an evaluative "questions for us" section:

- People who've read 3 or more "great questions to ask your interviewer" articles on LinkedIn.

- People who coincidentally happen to ask the same things you thought of when you created the grading sheet.

Here are the candidates who won't perform well at this:

- People who cared enough about the position to carefully research your organisation beforehand.

- People who are smart and can figure out your number from the things you speak about, what your office environment looks like, how many times the same employee goes for a smoke break while they're sitting in reception, etc.

- People whose cultural background makes them nervous asking challenging or probing questions to a stranger.

- People who are very interested and ask a lot of questions, but don't ask them in a form or format you were expecting.

- People who largely don't care where they work; this is just where their agent happened to send them.

If you look at the first list, it's concerningly similar to the equivalent list for a thoroughly discredited practice from 15-20 years ago: the brain teaser. "There are three light bulbs in a room, and three switches outside. How many times do you need to go into the room and why? Do you have any questions for us? Can you second-guess the things we like to be asked?" I don't want to go into full-on rant mode here, although I suspect it's already a bit late, but this is more than a bit arrogant. "I'm interesting, ask me what you think makes me interesting." If you think something about your organisation is interesting, then do the open and honest thing and tell your candidate outright, then see where they take the conversation.

Please, by all means let people ask questions, even suggest to them questions they might want to ask, but make it clear this is non-evaluative and live by that.

In Summary

Some of this may seem far-fetched. I thought it was far-fetched when I hatched most of the original ideas, but I had next to no hiring budget and a whole engineering team to build so you kinda take what works for you and celebrate when it turns out to be really effective.

If you want to take the important bits out:

- Interview skills != actual skills

- Get people doing representative tasks

- Work out if they're heading the same way you are

- I'm going to be insufferable if we get 15 years down the line and I'm right about the Questions For Us thing.